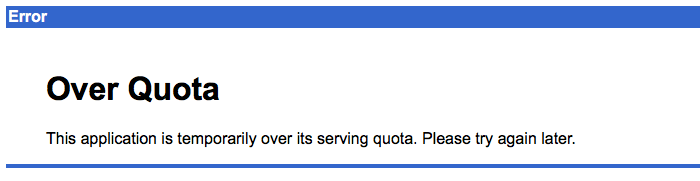

I recently ran into these issues myself when I implemented a Twitter auto-unfollow feature for my new website (AtariGamer.com). Because of the nature of Twitter's API and its usage limits, I had to split the work into a Cron job that kicked everything off, followed by a number of tasks on the push queue. What I didn't realise was that, with the default F1 autoscaling, new instances of my app were started to serve these 'background' requests as front-end instances. That quickly pushed me over the 28hr/day quota, and the entire site went down shortly after.

With over 5 hours until my quota refreshed, I started to look into this more deeply. I knew that there were two separate quotas: 28 hours for front-end instances and 9 hours for back-end instances. I realised then that I needed to make sure that all of my Cron and Push Queue tasks were executed on a back-end instance. Simple!

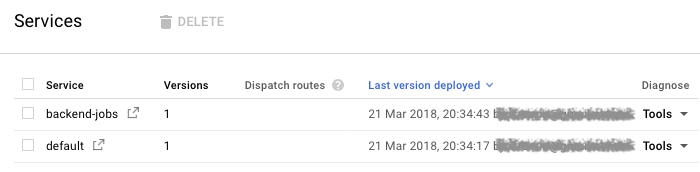

That led me to this page - Configuration Files. Specifically, I was interested in defining a separate service for my backend jobs. So, I created a file called backend-jobs.yaml and added the following content to it...

backend-jobs.yaml

    runtime: php55
    api_version: 1
    service: backend-jobs
    instance_class: B1
    basic_scaling:
      idle_timeout: 10m
      max_instances: 1
    handlers:
    - url: /xxxxxx/.*
      script: atga/routes.php
      login: admin
    - url: /_ah/start
      script: atga/routes.php

What the above file does is define a B1 instance that responds to some handlers, i.e. URLs that get requested by the Cron and Push Queue tasks. The main part here is the handler for "url: /xxxxxx/.*" - this routes all my requests to routes.php, which in turn interprets the URL and calls the appropriate controller (I'm using Silex for this). The "url: /_ah/start" handler is also required and must return an HTTP status of 200 or the backend instance of the app will not start.

My PHP code for the backend jobs stayed the same; it was just the service definition that changed. I then deployed this backend service using the gcloud tool...

Command

    gcloud app deploy backend-jobs.yaml

With the backend service defined, I could now go ahead with the necessary changes to the queue.yaml file. The only change I had to make was to add a target definition that pointed to the backend service I had just created...

queue.yaml

    queue:
    - name: xxxxx
      ...
      target: backend-jobs
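
For illustration, a complete queue entry might look something like the sketch below. The queue name `unfollow-queue` and the `rate` value are hypothetical placeholders, not values from the original configuration; only the `target` line comes from the setup described here.

```yaml
queue:
# Hypothetical queue name; use whatever name your code enqueues tasks to.
- name: unfollow-queue
  # Assumed rate of one task per second; tune it to the limits of the API you call.
  rate: 1/s
  # Route every task on this queue to the backend-jobs service instead of the default one.
  target: backend-jobs
```

Because the target is set on the queue itself, the code that adds tasks to the queue should not need to know which service ends up running them.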

Then each of the Cron jobs in the cron.yaml file was also updated in a similar fashion...

cron.yaml

    cron:
    - description: XXXXX
      ...
      target: backend-jobs
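
A fuller cron entry could be sketched along these lines. The description, URL and schedule are hypothetical examples rather than the real values; the URL simply needs to match one of the handlers defined for the backend service.

```yaml
cron:
# Hypothetical description, URL and schedule, for illustration only.
- description: kick off the auto-unfollow job
  # Assumed path; it has to match a handler in backend-jobs.yaml.
  url: /tasks/unfollow/start
  schedule: every 24 hours
  # Send the request to the backend-jobs service rather than the default one.
  target: backend-jobs
```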

This was followed by redeploying my Cron and Task Queue definitions...

Command

    gcloud app deploy queue.yaml
    gcloud app deploy cron.yaml

That was it! From this point on, all of my backend processing tasks ran on a separate B1 instance, and all of the usual front-end requests were served by the default F1 instance I had always had. I did notice that while I was still over quota on the F1 instance, the B1 instance would not start, but once the quota was refreshed, everything worked as expected.

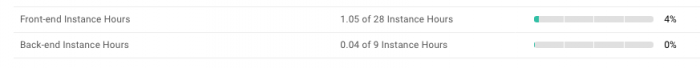

Best of all, no PHP code needed changing! I could also see on the App Engine Quotas page that both the front-end and back-end instances were being used as expected.

Now I don't have to worry about exhausting my front-end instance hours with my background processing tasks. All it took was a few configuration file changes and voila!

-i